What is load balancing? If you don’t know the difference between an elastic load balancer and an internet load balancer, and you have no idea what terms like software load balancing and round robin mean, we can help! This article will teach you the basics of load balancing, which is a critical part of managing a server farm's resources and preventing lag or downtime on your servers.

What is load balancing?

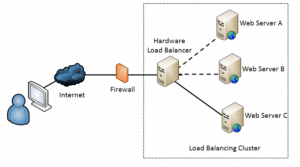

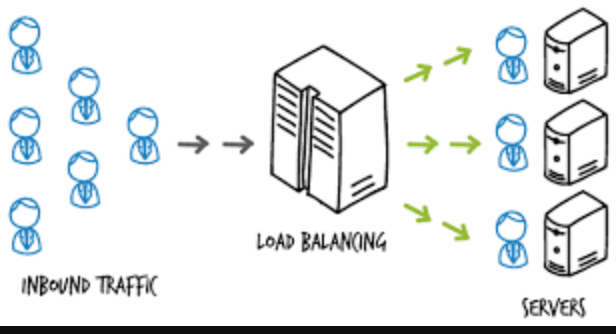

Load balancing, or network load balancing, is when a system administrator or network load balancer tracks incoming network traffic and distributes it as efficiently as possible across multiple backend servers, which are often called a server pool or server farm. Services and software are available to assist with this and to increase a website's or application's performance.

Why is load balancing necessary?

Essentially, it helps improve internet and website speed.

A lot of today’s most popular websites see an insanely high volume of traffic. Many of them see millions of requests coming in simultaneously from multiple different clients and users across the world. They need to be able to respond appropriately, offering the correct images, video, data, and text for the query, and this needs to happen quickly so end users do not experience lag, which is the death of a site’s popularity. People expect things to load within 3 seconds, but loading within 2 is much better.

To handle this and meet the required volumes of traffic, most companies add more servers to their network. They then need a sort of metaphorical crossing guard to perform effective server load balancing.

This person or software monitors your individual servers and sends each request to whichever server can handle it most easily and efficiently. This maximizes response speed across your network and lets you handle high loads without overworking your servers and having them break down on you. Load balancing works like this:

Load balancers distribute client requests across a network and spread the workload efficiently among multiple servers. They keep the service reliable and free of downtime by sending requests only to servers that are online and available. They also give you the flexibility to add and remove servers based on demand.

Session Persistence

Information about an end user's session is often stored locally in their browser. This is seen most frequently with online store sites that have a shopping cart function. The user can store items in their shopping cart and come back to find them when they are ready to check out and make their purchase.

This means you need to make sure the user stays assigned to the same server. If you change the server handling their requests before they finish shopping and checking out, you can cause a problem: the transaction can fail to go through and the customer can lose everything in their cart. For these cases, all requests from that particular client must be sent to the same server for the entirety of their session. This is what is known as session persistence.
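One simple way to picture session persistence is a lookup table from session to server. The sketch below is an illustration under assumptions, not how any particular product does it: `StickyBalancer` and the session IDs are made-up names, and real load balancers often track sessions with cookies or client addresses instead.

```python
import itertools


class StickyBalancer:
    """Assigns each new session a server, then keeps it there."""

    def __init__(self, servers):
        self._rotation = itertools.cycle(servers)
        self._assigned = {}  # session id -> server name

    def route(self, session_id):
        # First request of a session: pick the next server in rotation.
        if session_id not in self._assigned:
            self._assigned[session_id] = next(self._rotation)
        # Every later request of that session reuses the same server.
        return self._assigned[session_id]

    def end_session(self, session_id):
        # After checkout, the mapping is no longer needed.
        self._assigned.pop(session_id, None)


balancer = StickyBalancer(["web-1", "web-2"])
first = balancer.route("cart-alice")   # web-1
second = balancer.route("cart-bob")    # web-2
third = balancer.route("cart-alice")   # web-1 again, same session
```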

Dynamic Configuration of Server Groups

A lot of new apps put heavy and changing demand on servers, so servers need to be added or removed very regularly. This is a very common practice in cloud environments such as Amazon Web Services (AWS) and its Elastic Compute Cloud (EC2). Those systems let the customer pay only for the computing capacity they use, rather than paying for extra capacity they never need.

This is convenient for users, but if their traffic patterns are varied or unpredictable, it means you will constantly need to respond when traffic spikes and more capacity is needed. A load balancer needs to be able to add or remove servers from the group dynamically without disrupting any existing connections.
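Removing a server without disrupting existing connections is often called draining. Here is a small sketch of the idea, assuming a hypothetical `ServerGroup` class that tracks open connection counts; it is meant to show the bookkeeping, not a production design.

```python
class ServerGroup:
    def __init__(self):
        self.active = {}    # server name -> open connection count
        self.draining = {}  # servers being removed, same shape

    def add_server(self, name):
        self.active[name] = 0

    def remove_server(self, name):
        # Stop sending new work, but keep existing connections alive.
        count = self.active.pop(name)
        if count:
            self.draining[name] = count

    def open_connection(self):
        if not self.active:
            raise RuntimeError("no active servers")
        # New work goes to the active server with the fewest connections.
        name = min(self.active, key=self.active.get)
        self.active[name] += 1
        return name

    def close_connection(self, name):
        pool = self.active if name in self.active else self.draining
        pool[name] -= 1
        if pool is self.draining and pool[name] == 0:
            del pool[name]  # fully drained, safe to shut down


group = ServerGroup()
group.add_server("web-1")
conn = group.open_connection()      # lands on web-1
group.add_server("web-2")           # scale up during a spike
group.remove_server("web-1")        # scale down; web-1 starts draining
new_conn = group.open_connection()  # new work goes to web-2 only
group.close_connection(conn)        # web-1 finishes and is dropped
```

The connection that was already open on web-1 is allowed to finish before the server disappears from the group.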

Hardware vs Software Load Balancing Solutions

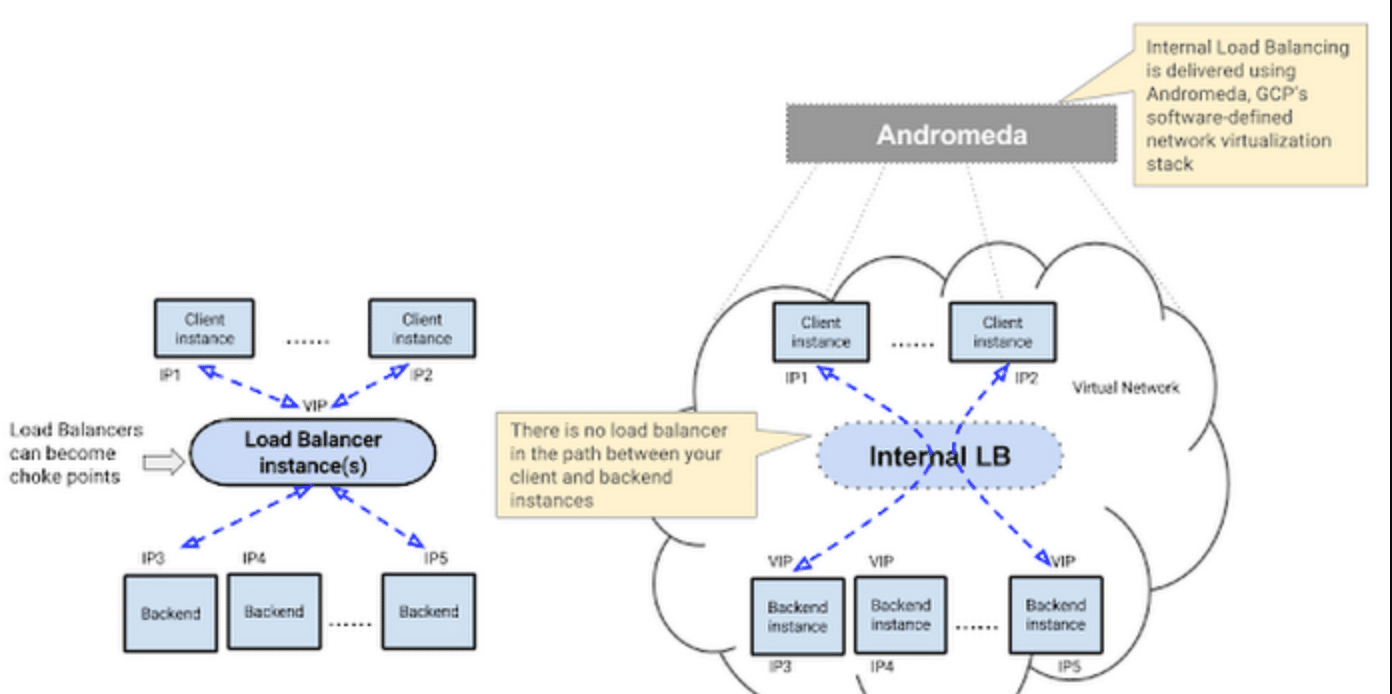

Load balancers are usually either hardware-based or software-based. With hardware solutions, a vendor loads their proprietary software onto a machine they provide, which often contains specialized processors. If you want to scale up when your traffic increases, you will have to buy more machines from the vendor.

Software solutions are a lot more flexible. They run on commodity hardware, which means they tend to be much cheaper and adapt more easily to changing traffic patterns. You can install the software on whatever hardware you want, or even in cloud environments.

What are Load Balancers and How Do They Work?

Load balancing refers to the process of efficiently spreading incoming network traffic over a collection of backend servers, sometimes referred to as a server farm or server pool. There are a few different types of load balancers. Elastic load balancing scales capacity to match the traffic your application receives, recognizing that demand differs at different times. It performs regular health checks on servers and uses that information to route traffic appropriately.
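The scaling side of elastic load balancing can be sketched as a simple sizing rule. Everything here is an assumption made for illustration: the function name, the idea that each server handles a fixed number of requests per second, and the minimum and maximum limits. Real services use richer signals such as CPU load and health check results.

```python
import math


def desired_server_count(requests_per_second, capacity_per_server,
                         min_servers=1, max_servers=10):
    """Return how many servers are needed for the current traffic."""
    needed = math.ceil(requests_per_second / capacity_per_server)
    # Never scale below the floor or above the ceiling.
    return max(min_servers, min(max_servers, needed))


quiet = desired_server_count(150, capacity_per_server=100)   # 2
peak = desired_server_count(950, capacity_per_server=100)    # 10
flood = desired_server_count(5000, capacity_per_server=100)  # capped at 10
```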

Geographic load balancing distributes traffic across multiple data centers, each of which hosts different servers. According to Mangomatter, the top hosts can help you take care of load balancing very easily. Spreading an application over multiple data centers improves resilience, because the failure of one location does not take the whole application offline. However, remember that you'll still want to follow security best practices so that your application isn't vulnerable to packet and network sniffers.

Virtual load balancers mimic hardware load balancers in software. They take the load balancing software from a physical appliance and run it on a virtual machine instead.

Popular Load Balancing Algorithms

There are several different ways to balance traffic. Some algorithms work better than others depending on the type and volume of requests you receive. Let’s look at the most common ones now.

1. Round Robin

With a Round Robin load balancing algorithm, requests are distributed to each server in turn. It's like taking turns. A request comes in and gets sent to the first server. That server takes the request, responds, and moves to the back of the line.

The next request will go to the next server. That server receives, responds, and moves to the back of the line. This process continues all the way through. Eventually, the first server will be back in the front of the line, and the process continues this way, looping infinitely.
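The "back of the line" loop maps neatly onto Python's `itertools.cycle`. This is a minimal sketch with made-up server names, not a full load balancer.

```python
import itertools

servers = ["server-1", "server-2", "server-3"]
rotation = itertools.cycle(servers)  # loops over the list forever


def next_server():
    """Return the server whose turn it is."""
    return next(rotation)


assignments = [next_server() for _ in range(5)]
print(assignments)
# ['server-1', 'server-2', 'server-3', 'server-1', 'server-2']
```

After the third request, the rotation wraps around to the first server again.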

2. Least Connections Method

This is a highly efficient method where the load balancer checks which server has the fewest connections currently open with clients and sends the new request there. In the weighted form described here, it also takes into account the computing capacity of each server. For example, if one server can handle 10 connections at a time and currently has 2, and another server can handle 20 connections at a time and currently has 4, then the load balancer treats both as carrying the same relative load (20% of capacity) when determining the workload.

Now suppose a third server that can handle 10 connections and has 5, and a fourth server that can handle 20 connections and has 2. The next request that comes in would be sent to the fourth server, which can handle 20 connections and only has 2.

Now, you have Server 1 at 2 out of 10 connections, Server 2 at 4 out of 20 connections, Server 3 at 5 out of 10 connections, and Server 4 at 3 out of 20 connections. The next job would also go to Server 4, bringing it to 4 out of 20. This leaves Servers 1, 2, and 4 at the same relative load and Server 3 at a higher one. The next job that comes in could be sent to any of Servers 1, 2, or 4. For this example, we will send it to Server 4.

Once Server 4's count increases to 5 out of 20, the next job would be sent to Server 1 or 2, and for this example, we will use Server 2. Now, the next job that comes in can only be sent to Server 1, because all the other servers are at a higher relative load.
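The four-server walkthrough above can be replayed in a few lines of Python. This sketch compares each server's connections divided by its capacity, using `Fraction` so ties are exact. The helper names are invented for illustration, and the tie-breaking choices are simply the ones made by hand in the text.

```python
from fractions import Fraction

# name -> [open connections, capacity], taken from the example above
servers = {
    "Server 1": [2, 10],
    "Server 2": [4, 20],
    "Server 3": [5, 10],
    "Server 4": [2, 20],
}


def relative_load(name):
    connections, capacity = servers[name]
    return Fraction(connections, capacity)


def candidates():
    """Return every server tied for the lowest relative load."""
    lowest = min(relative_load(name) for name in servers)
    return [name for name in servers if relative_load(name) == lowest]


history = []
# Replay the choices from the text, checking each one is a valid pick.
for choice in ["Server 4", "Server 4", "Server 4", "Server 2"]:
    options = candidates()
    assert choice in options
    history.append(options)
    servers[choice][0] += 1  # the chosen server gains a connection

history.append(candidates())
for options in history:
    print(options)
```

The printed lists show the eligible servers before each request: only Server 4 twice, then a three-way tie, then Servers 1 and 2, and finally Server 1 alone.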

3. Least Response Time Method

With the least response time method, the traffic is sent to the server that has the fewest active connections and the lowest average response time. Basically, this method factors in how tired a server is. It’s like employees.

Let’s say you have 2 employees, and both of them can work the same number of hours, and both of them have the same relative level of skill, but one of them hasn’t been feeling good and is sick. That employee’s response time is not going to be as fast as the employee who is in good health, even though they can both handle the same amount of work on a good day.

This method considers how responsive the server currently is as well as its current load. A lot of times, this method can be used to break the ties that are seen in the Least Connections Method. If all other factors are equally balanced, it shifts traffic to the web server that will be the quickest to respond.
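Using response time as a tie-breaker can be expressed as a sort key. In this sketch the `Backend` class and the sample numbers are made up, and ranking by connections first and response time second is only one possible way to combine the two measurements.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    active_connections: int
    avg_response_ms: float


def pick_least_response_time(backends):
    # Fewest active connections wins; average response time breaks ties.
    return min(backends,
               key=lambda b: (b.active_connections, b.avg_response_ms))


pool = [
    Backend("web-1", active_connections=3, avg_response_ms=120.0),
    Backend("web-2", active_connections=3, avg_response_ms=45.0),
    Backend("web-3", active_connections=7, avg_response_ms=20.0),
]
chosen = pick_least_response_time(pool)
print(chosen.name)  # web-2
```

web-1 and web-2 are tied on connections, so the faster of the two is chosen, even though the busier web-3 has the lowest response time overall.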

4. Hashing Methods

Hashing methods use a value from the request, most commonly the client's IP address (IP Hash), to determine where the request goes. The load balancer computes a hash of the client IP address and uses the result to select a server, so requests from the same address keep going to the same server as long as the server pool does not change.

This makes IP hashing useful when a client needs to stay on one server, as with the session persistence described earlier. Routing users to the geographically closest servers, which on average gives faster upload and download speeds, is a separate technique handled by geographic load balancing.
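In practice, IP hashing is usually implemented by hashing the client's address and using the result to pick a server, so the same client keeps landing on the same server. Below is a minimal sketch; the function name, the choice of SHA-256, and the example address are assumptions made for illustration.

```python
import hashlib

servers = ["server-1", "server-2", "server-3"]


def pick_by_ip(client_ip, pool):
    """Map a client IP address to one server in the pool."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a number, then wrap it
    # around the pool size to get a valid index.
    index = int.from_bytes(digest[:8], "big") % len(pool)
    return pool[index]


first = pick_by_ip("203.0.113.7", servers)
again = pick_by_ip("203.0.113.7", servers)
print(first == again)  # True: the same address maps to the same server
```

One trade-off of this simple modulo approach is that adding or removing a server changes the mapping for most clients. Consistent hashing is a common way to reduce that reshuffling.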

FAQs

1. Is load balancing software?

There are both software load balancers and hardware load balancers. Typically, software load balancing is provided as a feature of an application delivery controller (ADC) running on a standard server or virtual machine. A hardware load balancing device (HLD) is a piece of hardware that is completely self-contained and runs load balancing software.

2. What is load balancing and why is it important?

Load balancing's primary objective is to prevent any single server from becoming overloaded and possibly failing. In other words, load balancing enhances server availability and contributes to the prevention of downtime.

3. Why do we need a load balancer?

We need a load balancer because it can route client requests quickly and efficiently across all servers. To optimize performance, a load balancer ensures that no server is overloaded. If a server fails, the load balancer routes traffic to the remaining servers until replacement servers are added.

4. What happens if a load balancer goes down?

If a primary load balancer fails, the secondary load balancer takes over and becomes active. The load balancers communicate over a heartbeat link that continuously monitors their status. If all load balancers fail (or are accidentally misconfigured), the downstream servers become unreachable until the issue is resolved or you manually route around it.
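The heartbeat idea can be sketched as a timer: if the secondary has not heard from the primary within a set window, it takes over. The `HeartbeatMonitor` class, the 3-second timeout, and the fake clock below are all assumptions made so the example runs instantly; they do not describe any specific product.

```python
import time


class HeartbeatMonitor:
    def __init__(self, timeout_seconds, clock=time.monotonic):
        self.timeout = timeout_seconds
        self.clock = clock
        self.last_beat = clock()

    def beat(self):
        # Called whenever a heartbeat arrives from the primary.
        self.last_beat = self.clock()

    def primary_alive(self):
        return self.clock() - self.last_beat <= self.timeout


def active_balancer(monitor):
    return "primary" if monitor.primary_alive() else "secondary"


# Fake clock so the example does not need to sleep.
now = [0.0]
monitor = HeartbeatMonitor(timeout_seconds=3.0, clock=lambda: now[0])

now[0] = 2.0
before = active_balancer(monitor)  # last heartbeat 2 seconds ago
now[0] = 10.0
after = active_balancer(monitor)   # no heartbeat for 10 seconds
print(before, after)  # primary secondary
```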

5. Does load balancing increase speed?

Load balancing does not make any individual server faster, but it can improve the overall performance of a system. When a system is overloaded, load balancing can help to distribute the workload more evenly across multiple servers. This can help to improve performance and reduce the amount of time that is needed to complete tasks.

6. What is Global Server Load Balancing?

Global server load balancing (GSLB) is the process of distributing workloads among geographically dispersed servers. This enables effective traffic distribution among widely distant application servers.

7. What are the types of load balancer in cloud computing?

Different types of load balancing in cloud computing:

- Static Load Balancing
- Dynamic Load Balancing
- Round Robin Load Balancing
- Weighted Round Robin Load Balancing
- Opportunistic Load Balancing
- Minimum To Minimum Load Balancing
- Maximum To Minimum Load Balancing